The dataset is stock market data for Tata Consultancy Services (TCS), India, over the last two decades (2000 – 2019). Here, we explore how to predict the VWAP (Volume Weighted Average Price), a trading benchmark used by many traders. VWAP gives the average price at which the stock traded throughout the day, weighted by volume. Many traders take positions based on the VWAP, which makes predicting its value important.
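To make the definition concrete, here is a minimal sketch of the VWAP calculation: the sum of price times volume, divided by total volume. The function name `vwap` and the three trades are hypothetical and only illustrate the arithmetic.

```python
def vwap(prices, volumes):
    """Volume-weighted average price: sum(price * volume) / sum(volume)."""
    total_volume = sum(volumes)
    if total_volume == 0:
        raise ValueError("total volume must be positive")
    return sum(p * v for p, v in zip(prices, volumes)) / total_volume


# Three hypothetical trades during one day: price and number of shares traded.
trade_prices = [100.0, 102.0, 101.0]
trade_volumes = [200, 100, 700]

day_vwap = vwap(trade_prices, trade_volumes)
print(round(day_vwap, 2))  # 100.9
```

The large trade at 101 pulls the result toward 101, whereas a plain average of the three prices would be 101.0 regardless of how many shares changed hands at each price.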

Data Preparation

# Install the package using the following statement

# Import the required packages

#Load the dataset

#Converting Date into DateTime format
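The loading and date-conversion steps might look like the sketch below. The original file name is not given, so a small in-memory CSV with made-up values stands in for it; the column names are assumptions based on the fields mentioned in this article, and setting `Date` as the index is an optional choice, not necessarily what the original code did.

```python
import io

import pandas as pd

# Hypothetical three-row sample standing in for the real TCS CSV file.
sample_csv = io.StringIO(
    "Date,Open,High,Low,Close,VWAP,Volume\n"
    "2019-01-01,100.0,103.0,99.0,102.0,101.0,1000\n"
    "2019-01-02,102.0,105.0,101.0,104.0,103.0,1500\n"
    "2019-01-03,104.0,106.0,100.0,101.0,103.5,1200\n"
)

df = pd.read_csv(sample_csv)

# Convert the Date column from strings to datetime values.
df["Date"] = pd.to_datetime(df["Date"])

# Optional: index by date so that time-based slicing works later.
df = df.set_index("Date")

# Summary of column types and basic statistics.
df.info()
print(df.describe())
```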

# Displaying the summary of the data

#Checking for the missing values

#Visualizing the locations of the missing data

# Removing columns with missing values that are also not important features.

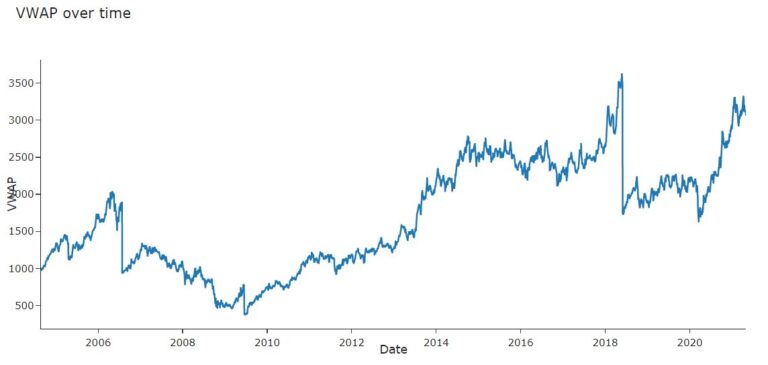
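A minimal sketch of the missing-value check and column removal follows. The frame and the sparse `Trades` column are hypothetical stand-ins; the columns actually dropped in the original analysis are not named in the text.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    {
        "VWAP": [100.0, 101.5, 102.0, 101.0],
        "Volume": [1000, 1200, 900, 1100],
        "Trades": [np.nan, np.nan, np.nan, 50.0],  # hypothetical sparse column
    }
)

# Count missing values per column.
missing_counts = df.isnull().sum()
print(missing_counts)

# Drop every column that contains at least one missing value.
cols_with_missing = missing_counts[missing_counts > 0].index.tolist()
df_clean = df.drop(columns=cols_with_missing)
print(df_clean.columns.tolist())
```

Dropping whole columns is reasonable only when, as stated above, they are not important features; otherwise imputing or dropping rows may be a better fit.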

#Exploratory Data Analysis

#Plot VWAP(Volume Weighted Average Price) over time.
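One way to produce the full-history plot and the per-year plots is sketched below, assuming the frame is indexed by date. The data is synthetic and `plot_vwap` is a hypothetical helper; matplotlib is imported inside it so the slicing part runs on its own.

```python
import pandas as pd

# Synthetic daily VWAP values spanning a year boundary.
dates = pd.date_range("2018-12-28", periods=8, freq="D")
df = pd.DataFrame({"VWAP": [float(v) for v in range(100, 108)]}, index=dates)

# Partial-string indexing on a DatetimeIndex selects one calendar year.
vwap_2019 = df.loc["2019", "VWAP"]
print(vwap_2019)


def plot_vwap(series, title):
    """Line plot of a VWAP series (requires matplotlib)."""
    import matplotlib.pyplot as plt

    ax = series.plot(figsize=(12, 4), title=title)
    ax.set_xlabel("Date")
    ax.set_ylabel("VWAP")
    plt.show()
    return ax
```

Calling `plot_vwap(df["VWAP"], "VWAP over time")` would draw the whole series, and `plot_vwap(vwap_2019, "VWAP 2019")` a single year.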

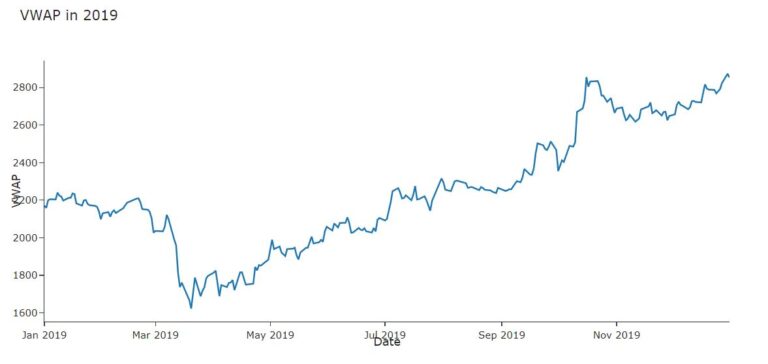

#VWAP 2019

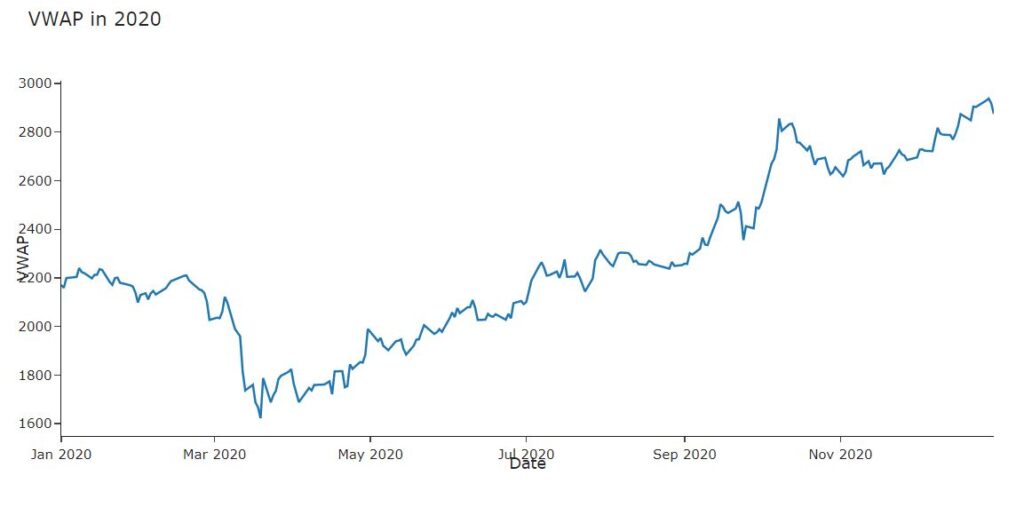

#VWAP 2020

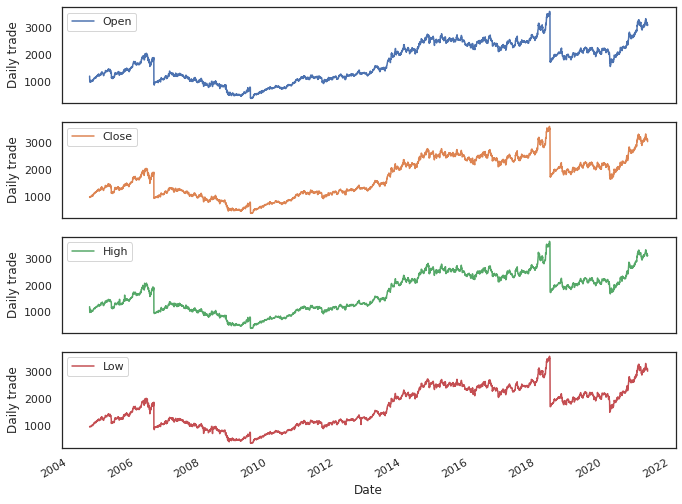

# Open, Close, High, Low prices over time

#Open, Close, High, and Low all follow the same pattern

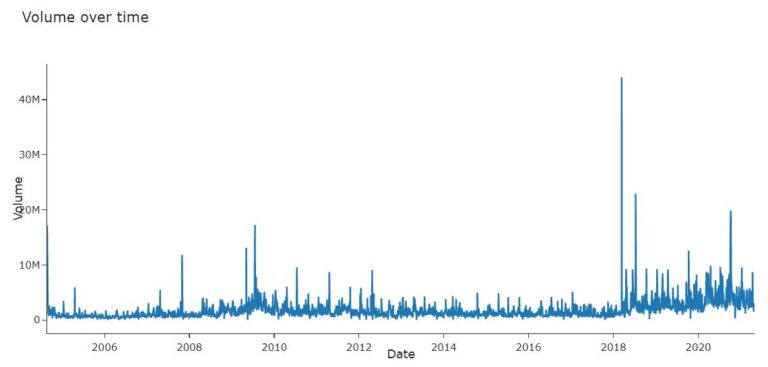

# Plot Volume over time

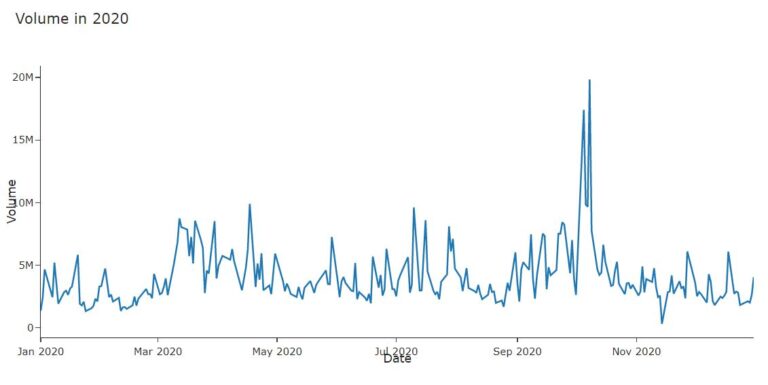

#Plot Volume in 2020

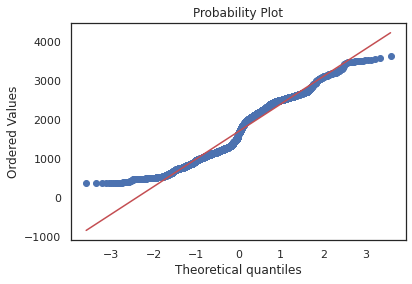

#Q-Q plot of VWAP, used to check whether the data follows a given distribution (such as the normal distribution)
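A Q-Q plot pairs the sorted sample values with the quantiles a reference distribution would produce at the same positions; points near a straight line suggest the data follows that distribution. The sketch below computes those pairs against a normal distribution using only the standard library. `qq_points` is a hypothetical helper and the values are made up; in practice `scipy.stats.probplot` is a common way to draw the plot.

```python
from statistics import NormalDist, mean, stdev


def qq_points(values):
    """Return (theoretical, sample) quantile pairs against a fitted normal."""
    ordered = sorted(values)
    n = len(ordered)
    reference = NormalDist(mean(ordered), stdev(ordered))
    # Plotting positions (i - 0.5) / n stay strictly inside (0, 1).
    theoretical = [reference.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return list(zip(theoretical, ordered))


# Hypothetical VWAP values.
values = [98.0, 102.0, 100.0, 99.0, 101.0]
points = qq_points(values)
for theo, sample in points:
    print(f"{theo:8.3f}  {sample:8.3f}")
```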

#Dickey-Fuller Test

The Augmented Dickey-Fuller test is a type of statistical test called a unit root test.

The intuition behind a unit root test is that it determines how strongly a time series is defined by a trend. It uses an autoregressive model and optimizes an information criterion across multiple different lag values.

The null hypothesis of the test is that the time series can be represented by a unit root, that it is not stationary (has some time-dependent structure). The alternate hypothesis (rejecting the null hypothesis) is that the time series is stationary.

Null Hypothesis (H0): If we fail to reject it, the test suggests the time series has a unit root, meaning it is non-stationary. It has some time-dependent structure.

Alternate Hypothesis (H1): If the null hypothesis is rejected, the test suggests the time series does not have a unit root, meaning it is stationary. It does not have time-dependent structure.

We interpret this result using the p-value from the test. A p-value below a threshold (such as 5% or 1%) suggests we reject the null hypothesis (the series is stationary); otherwise, a p-value above the threshold suggests we fail to reject the null hypothesis (the series is non-stationary).

p-value > 0.05: Fail to reject the null hypothesis (H0); the data has a unit root and is non-stationary.

p-value <= 0.05: Reject the null hypothesis (H0); the data does not have a unit root and is stationary.
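The decision rule above can be sketched as follows. `interpret_adf` and `adf_report` are hypothetical helper names; `adf_report` assumes statsmodels is installed and calls its `adfuller` function, which selects the lag by information criterion when `autolag="AIC"`.

```python
def interpret_adf(p_value, alpha=0.05):
    """Apply the decision rule: reject H0 (unit root) when p_value <= alpha."""
    if p_value <= alpha:
        return "reject H0: no unit root, series looks stationary"
    return "fail to reject H0: unit root, series looks non-stationary"


def adf_report(series, alpha=0.05):
    """Run the ADF test on a 1-D series and interpret the p-value."""
    # Imported here so interpret_adf works without statsmodels installed.
    from statsmodels.tsa.stattools import adfuller

    statistic, p_value, used_lag, n_obs, critical_values, _ = adfuller(
        series, autolag="AIC"
    )
    return {
        "adf_statistic": statistic,
        "p_value": p_value,
        "used_lag": used_lag,
        "critical_values": critical_values,
        "conclusion": interpret_adf(p_value, alpha),
    }


print(interpret_adf(0.30))
print(interpret_adf(0.01))
```

With the data loaded, a call such as `adf_report(df["VWAP"])` would return the test statistic, the p-value, and the conclusion in one dictionary.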

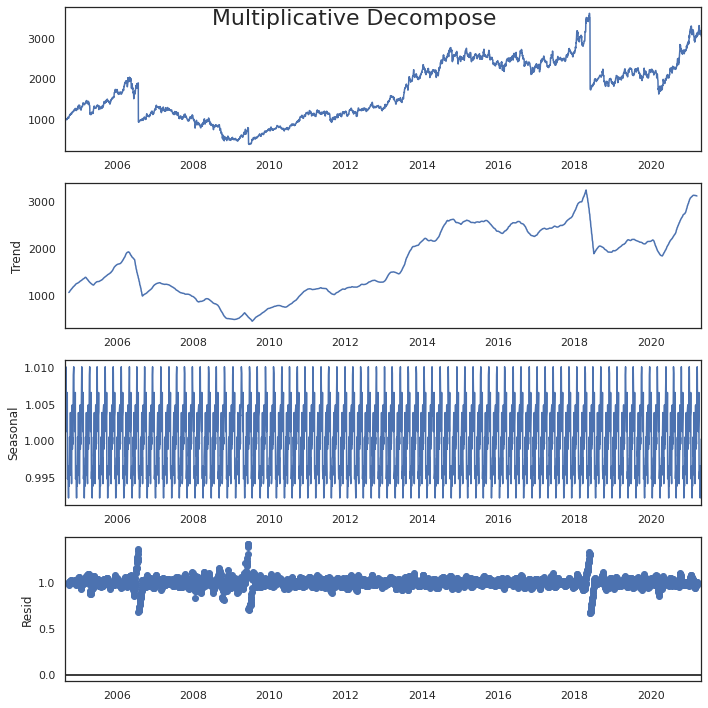

#Seasonal Decompose
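Seasonal decomposition splits a series into trend, seasonal, and residual components. The original code is not shown; statsmodels provides `seasonal_decompose` for this. To show the idea, the sketch below hand-rolls a classical additive decomposition with pandas. It is an illustration under simplifying assumptions (odd period, synthetic data), not the library's implementation.

```python
import numpy as np
import pandas as pd


def additive_decompose(series, period):
    """Classical additive decomposition for an odd `period`."""
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods")
    # Trend: centered moving average over one full period.
    trend = series.rolling(window=period, center=True).mean()
    detrended = series - trend
    # Seasonal: average detrended value at each position in the cycle,
    # shifted so the seasonal effects sum to zero.
    phase = np.arange(len(series)) % period
    seasonal_means = detrended.groupby(phase).mean()
    seasonal_means = seasonal_means - seasonal_means.mean()
    seasonal = pd.Series(seasonal_means.to_numpy()[phase], index=series.index)
    resid = series - trend - seasonal
    return trend, seasonal, resid


# Synthetic series: linear trend plus a weekly pattern that sums to zero.
pattern = np.array([3.0, -1.0, -2.0, 0.0, 1.0, -2.0, 1.0])
t = np.arange(35)
index = pd.date_range("2019-01-01", periods=35, freq="D")
series = pd.Series(100.0 + 0.5 * t + np.tile(pattern, 5), index=index)

trend, seasonal, resid = additive_decompose(series, period=7)
print(seasonal.head(7).round(6).tolist())
```

On this noise-free input the seasonal component recovers the weekly pattern and the residuals are zero wherever the trend is defined; on real price data the residual carries whatever the trend and seasonal parts do not explain.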

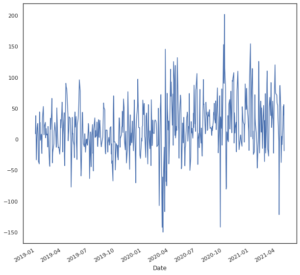

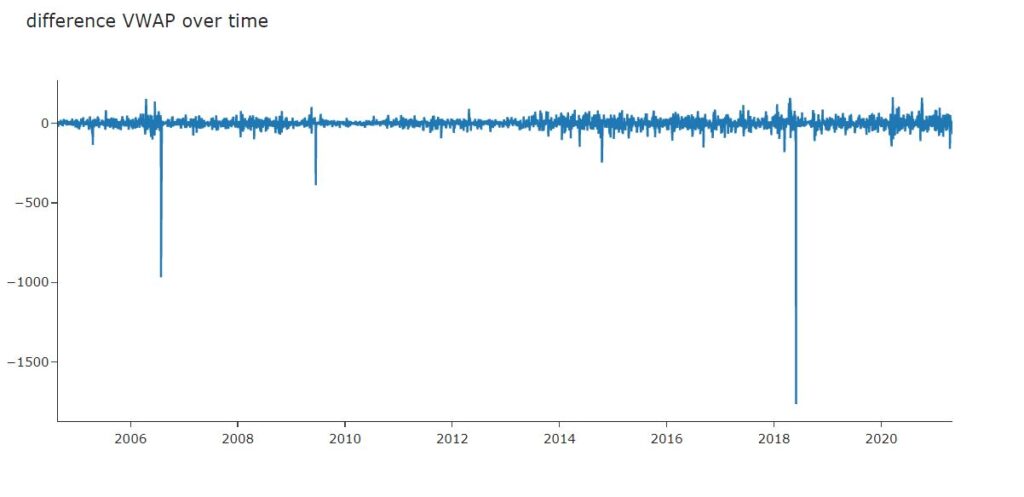

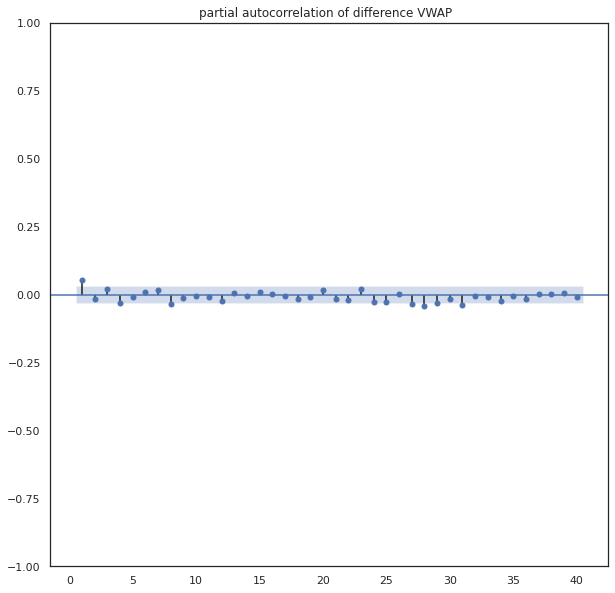

#Convert Non-Stationary into Stationary

# Differencing

There is no need to convert the time series into stationary data here. This step is included only to show how to check for stationarity and how to convert non-stationary data into stationary data.
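Differencing replaces each value with its change from the previous value, which removes a trend in the level. A minimal sketch with pandas on made-up values:

```python
import pandas as pd

# Hypothetical VWAP values with an accelerating upward trend.
vwap = pd.Series([100.0, 102.0, 105.0, 109.0, 114.0], name="VWAP")

# First difference: day-over-day change. The first row has no predecessor.
vwap_diff = vwap.diff().dropna()
print(vwap_diff.tolist())  # [2.0, 3.0, 4.0, 5.0]

# Differencing again removes the remaining linear trend in the changes.
vwap_diff2 = vwap_diff.diff().dropna()
print(vwap_diff2.tolist())  # [1.0, 1.0, 1.0]
```

After differencing, the stationarity test can be rerun on the differenced series to confirm the effect.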

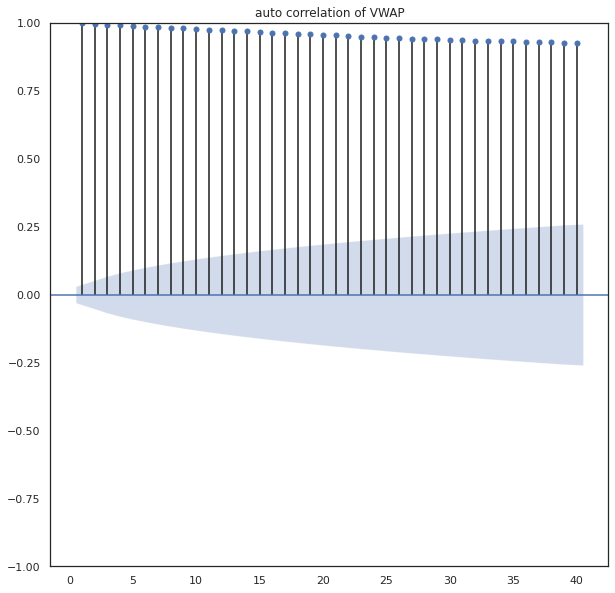

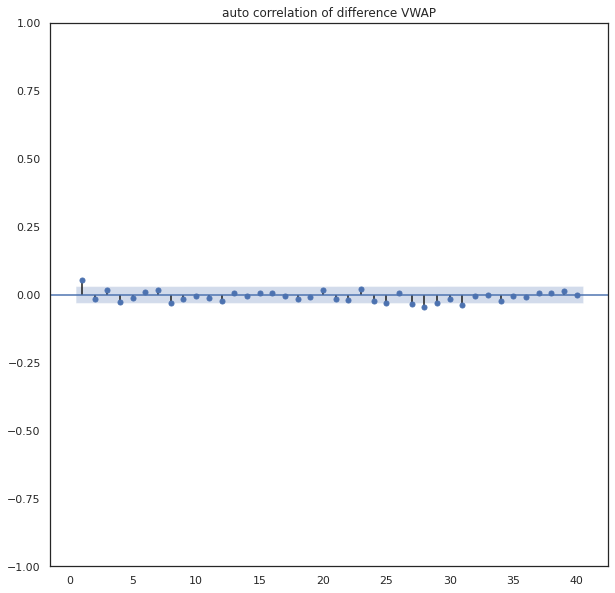

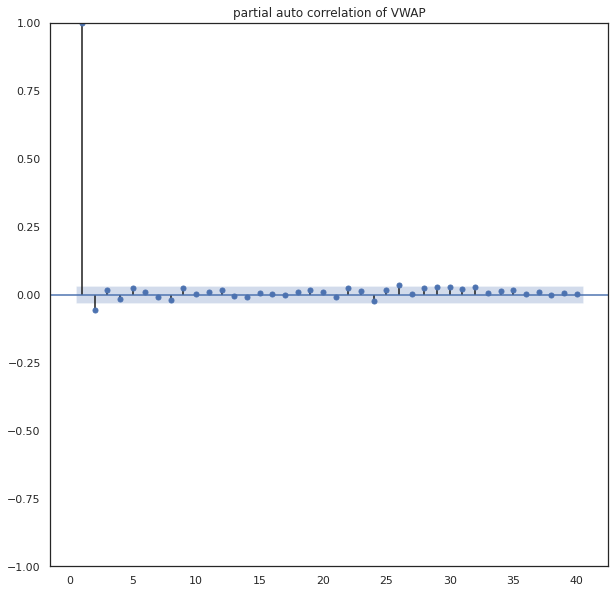

# Autocorrelation and partial autocorrelation plots

#Autocorrelation of VWAP
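Autocorrelation at lag k measures how strongly the series correlates with itself shifted by k steps. The sketch below computes the standard sample estimate with NumPy on a toy series; `acf` is a hypothetical helper. For the actual plots, statsmodels offers `plot_acf` and `plot_pacf` in `statsmodels.graphics.tsaplots`.

```python
import numpy as np


def acf(values, max_lag):
    """Sample autocorrelation for lags 0..max_lag."""
    x = np.asarray(values, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array(
        [np.dot(x[: len(x) - k], x[k:]) / denom for k in range(max_lag + 1)]
    )


# Toy series that flips sign every step: negative at odd lags, positive at even.
toy = [1, -1, 1, -1, 1, -1, 1, -1]
rho = acf(toy, max_lag=2)
print(rho)  # [ 1.    -0.875  0.75 ]
```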

# Feature Engineering

We add lagged values of High, Low, Volume, and Turnover. We use three sets of lagged values: one for the previous day, one looking back 7 days, and one looking back 30 days, as proxies for last-week and last-month metrics.
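One reading of this step is sketched below with plain `shift` lags of 1, 7, and 30 days on synthetic data. The column naming scheme (`High_lag7` and so on) is an assumption; rolling means over the same windows would be another reasonable way to build "last week" and "last month" features.

```python
import numpy as np
import pandas as pd

# Synthetic frame with the four columns named in the text.
dates = pd.date_range("2019-01-01", periods=40, freq="D")
df = pd.DataFrame(
    {
        "High": np.arange(100.0, 140.0),
        "Low": np.arange(90.0, 130.0),
        "Volume": np.arange(1000.0, 1040.0),
        "Turnover": np.arange(5000.0, 5040.0),
    },
    index=dates,
)

lag_columns = ["High", "Low", "Volume", "Turnover"]
lags = [1, 7, 30]

# shift(k) moves values k rows down, so each row only sees past data.
for col in lag_columns:
    for lag in lags:
        df[f"{col}_lag{lag}"] = df[col].shift(lag)

# The first 30 rows lack a 30-day lag and are dropped.
features = df.dropna()
print(features.shape)  # (10, 16)
```

Because every feature comes from earlier rows, the model never sees same-day values of High, Low, Volume, or Turnover when predicting that day's VWAP.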

df.head()

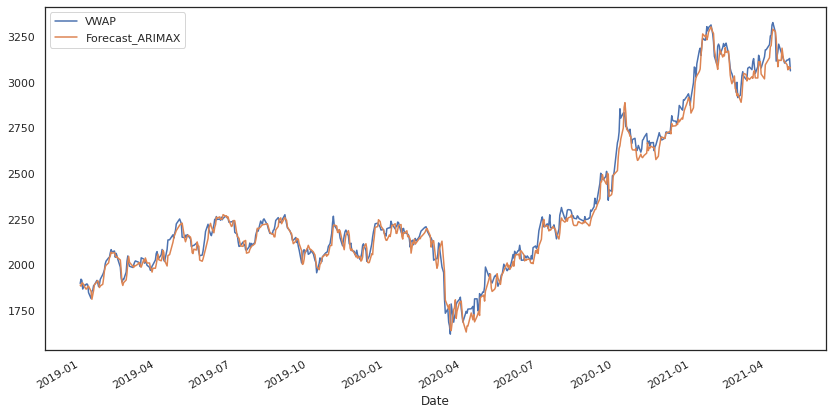

#Auto ARIMA model
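A sketch of how the model could be fitted follows, assuming the pmdarima package and a chronological train/test split. Both helper names are hypothetical, the split fraction is arbitrary, and the keyword `X` for exogenous features applies to recent pmdarima releases (older ones call it `exogenous`).

```python
import pandas as pd


def chronological_split(frame, train_fraction=0.8):
    """Split a time-ordered frame without shuffling."""
    cut = int(len(frame) * train_fraction)
    return frame.iloc[:cut], frame.iloc[cut:]


def fit_and_forecast(train, test, target, exog_cols):
    """Fit pmdarima's auto_arima on `train` and forecast over `test`."""
    # Imported here so the split helper works without pmdarima installed.
    from pmdarima import auto_arima

    model = auto_arima(
        train[target],
        X=train[exog_cols],  # named `exogenous` in older pmdarima releases
        trace=True,
        error_action="ignore",
        suppress_warnings=True,
    )
    forecast = model.predict(n_periods=len(test), X=test[exog_cols])
    return model, forecast


# Tiny synthetic frame, just to show the split.
dates = pd.date_range("2019-01-01", periods=10, freq="D")
demo = pd.DataFrame({"VWAP": [float(v) for v in range(100, 110)]}, index=dates)
train, test = chronological_split(demo)
print(len(train), len(test))  # 8 2
```

The split keeps the most recent rows for testing, which mirrors how the forecast would be used: fit on the past, predict the future.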

#Analyzing residuals
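Residuals are the differences between actual and forecast values. A minimal sketch with NumPy on made-up numbers, summarizing them with the mean residual (bias), MAE, and RMSE:

```python
import numpy as np

# Hypothetical actual and forecast VWAP values.
actual = np.array([100.0, 102.0, 101.0, 105.0])
forecast = np.array([99.0, 103.0, 101.0, 103.0])

residuals = actual - forecast
bias = residuals.mean()                   # systematic over/under-forecasting
mae = np.mean(np.abs(residuals))          # mean absolute error
rmse = np.sqrt(np.mean(residuals ** 2))   # root mean squared error

print(residuals.tolist())  # [1.0, -1.0, 0.0, 2.0]
print(bias, mae, round(float(rmse), 4))  # 0.5 1.0 1.2247
```

Residuals that center on zero with no visible pattern over time suggest the model has captured the structure in the series; a persistent bias or trend in them suggests it has not.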